Basic Information

- Instructor: Jie Wang
- Email: jiewangx@ustc.edu.cn
- Time and Location: Tues, 14:00 - 15:35 (GT-B111); Thur, 15:55 - 17:30 (GT-B211)
- TAs:
  - YinQi Bai (yinqibai@miralab.ai)
  - Runquan Gui (runquangui@miralab.ai)
  - Zhiwei Zhuang (zhiweizhuang@miralab.ai)
  - Xilin Xia (xilinxia@miralab.ai)
  - Yuhang Jiang (yuhangjiang@miralab.ai)

Lectures

All course materials will be shared via this page.

| Index | Date | Topic | Lecture Notes | Homework |
|---|---|---|---|---|
| 00 | Sept 03, 2024 | Introduction | Lec00-Introduction.pdf | |
| 01 | Sept 10, 2024 | Review of Mathematics I | Lec01-MathematicalReview.pdf | HW01.pdf |
| 02 | Sept 12, 2024 | Review of Mathematics II | | |
| 03 | Sept 19, 2024 | Linear Regression I | Lec02-LinearRegression.pdf | |
| 04 | Sept 24, 2024 | Linear Regression II | | |
| 05 | Sept 26, 2024 | Bias-Variance Decomposition | Lec03-BiasVarianceDecomposition.pdf | HW02.pdf |
| 06 | Oct 08, 2024 | Convex Sets I | Lec04-ConvexSets.pdf | |
| 07 | Oct 10, 2024 | Convex Sets II | | |
| 08 | Oct 15, 2024 | Separation Theorems I | Lec05-SeparationTheorems.pdf | |
| 09 | Oct 17, 2024 | Separation Theorems II | | |
| 10 | Oct 22, 2024 | Convex Functions I | Lec06-ConvexFunctions.pdf | |
| 11 | Oct 24, 2024 | Convex Functions II | | HW03.pdf |
| 12 | Oct 29, 2024 | Subdifferential I | Lec07-Subdifferential.pdf | |
| 13 | Nov 05, 2024 | Subdifferential II | | HW04.pdf |
| 14 | Nov 07, 2024 | Convex Optimization Problems I | Lec08-ConvexOptimizationProblems.pdf | |
| 15 | Nov 12, 2024 | Convex Optimization Problems II | | |
| 16 | Nov 14, 2024 | Decision Tree | Lec09-DecisionTree.pdf | |
| 17 | Nov 19, 2024 | Naive Bayes Classifier | Lec10-NaiveBayesClassifier.pdf | |
| 18 | Nov 21, 2024 | Neural Networks | Lec14-NeuralNetworks.pdf | |
| 19 | Nov 26, 2024 | Convolutional Neural Network | Lec15-ConvolutionalNeuralNetwork.pdf | |
| 20 | Nov 28, 2024 | Logistic Regression | Lec11-LogisticRegression.pdf | HW05.pdf |
| 21 | Dec 03, 2024 | SVM I | Lec12-SVM1.pdf | |
| 22 | Dec 05, 2024 | SVM II | Lec13-SVM2.pdf | |
| 23 | Dec 10, 2024 | Principal Component Analysis | Lec16-PrincipalComponentAnalysis.pdf | |
| 24 | Dec 12, 2024 | Reinforcement Learning I | Lec17-RL_DeterministicEnvironment.pdf | HW06.pdf |
| 25 | Dec 17, 2024 | Reinforcement Learning II | Lec18-RL_StochasticEnvironment.pdf | HW07.pdf |

Project

Description

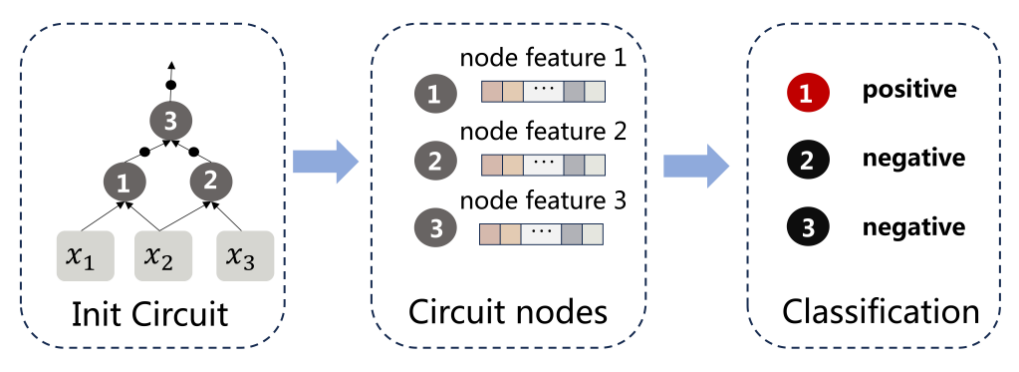

Logic Optimization is one of the most important EDA tools in the front-end workflow. It aims to optimize circuits, modeled as directed acyclic graphs, by reducing their size and/or depth. A key task in Logic Optimization is to classify each circuit node as either 'positive' or 'negative', which has a significant impact on the optimization performance. In this project, your task is to implement a model for circuit node classification.

Dataset

A widely used open-source circuit benchmark, EPFL, consisting of 15 circuits, is provided for training. You can download the training dataset (in .npy format) from here. The dataset contains node features and their corresponding labels. Please refer to the dataset class in main.py to learn how to extract the features and labels needed for training. Each node is represented by a 69-dimensional feature vector, and its label is either 0 or 1. The individual node features are listed in the following table.

| Feature Index | Meaning |
|---|---|
| 0 | The number of fanin nodes |
| 1 | The number of fanout nodes |
| 2 | The level of the node |
| 3 | The levelR of the node |
| 4 | The node ID |
| 5-68 | The output of the truth table for the node (binary values 0 or 1) |
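As a rough illustration of the layout in the table, the sketch below splits a feature matrix into its documented column groups. It assumes the features are available as a NumPy array of shape (N, 69); the actual file layout is defined by the dataset class in main.py, so the function name `split_node_features` and the toy data are hypothetical.

```python
import numpy as np

# Assumption: one row per node, 69 columns laid out as in the table above.
NUM_FEATURES = 69
TRUTH_TABLE_START = 5  # columns 5-68 hold the 64 truth-table outputs


def split_node_features(features: np.ndarray):
    """Split an (N, 69) feature matrix into the column groups from the table."""
    if features.ndim != 2 or features.shape[1] != NUM_FEATURES:
        raise ValueError(f"expected shape (N, {NUM_FEATURES}), got {features.shape}")
    structural = features[:, :4].astype(np.float64)  # fanin, fanout, level, levelR
    node_ids = features[:, 4].astype(np.int64)       # an identifier, not a quantity
    truth_table = features[:, TRUTH_TABLE_START:].astype(np.float64)  # 64 bits
    return structural, node_ids, truth_table


# Toy data standing in for the real .npy contents.
rng = np.random.default_rng(0)
toy = np.zeros((3, NUM_FEATURES))
toy[:, :4] = rng.integers(0, 10, size=(3, 4))
toy[:, 4] = np.arange(3)
toy[:, 5:] = rng.integers(0, 2, size=(3, 64))

structural, node_ids, truth_table = split_node_features(toy)
print(structural.shape, node_ids.shape, truth_table.shape)  # (3, 4) (3,) (3, 64)
```

Whether the node ID should be fed to the model at all is a modeling decision that the table does not settle; the sketch only keeps it separate so that the choice stays explicit.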

Requirements

- Do NOT use any autograd tool or any optimization tool from machine learning packages. You are expected to implement your algorithm from scratch. For example, if you want to use a neural network, you are expected to implement both the forward and backward passes. You may use the packages in the Whitelist. TAs will update the Whitelist if your requests are reasonable.
- You can work in a team of no more than three members. Please list the percentage of each member's contribution in your report, e.g., {San Zhang: 30%, Si Li: 35%, Wu Wang: 35%}.
- You are provided with two .py files named main.py and For_TA_test.py. We have implemented the data collection code, and you are expected to implement your algorithm. main.py is used for training your model, and For_TA_test.py is used by the TAs to evaluate your model. We provide an example code here. For detailed requirements, please refer to the comments in our code.
- You are expected to send a package named [your student ID].zip to ml2024fall_ustc@163.com. The package contains the [your student ID] directory, organized as follows:

      [your student ID]
      |- main.py
      |- For_TA_test.py
      |- ... (your code and model)
      |- [your student ID]-report.pdf (your report)

- For teamwork, please use the team leader's student ID in the package name; the team leader should submit the package.
- Remember to save the trained model. You are expected to send your trained model to the aforementioned e-mail address.
- Please submit a detailed report. The report should include all the details of your project, e.g., the implementation, the experimental settings, and the analysis of your results.
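To illustrate what implementing both passes from scratch can look like, here is a minimal sketch of logistic regression trained by plain gradient descent with a hand-derived gradient. It assumes NumPy is on the Whitelist, which should be checked first; the function names, hyperparameters, and toy data are hypothetical and are not taken from main.py.

```python
import numpy as np


def sigmoid(z):
    # Clip the input so that exp does not overflow for large |z|.
    return 1.0 / (1.0 + np.exp(-np.clip(z, -50.0, 50.0)))


def forward(X, w, b):
    """Forward pass: predicted probability of label 1 for each row of X."""
    return sigmoid(X @ w + b)


def backward(X, y, p):
    """Backward pass: gradients of the mean binary cross-entropy w.r.t. w and b."""
    n = X.shape[0]
    err = p - y  # dL/dz for a sigmoid output with cross-entropy loss
    return X.T @ err / n, err.mean()


def train(X, y, lr=0.5, epochs=500):
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = forward(X, w, b)
        grad_w, grad_b = backward(X, y, p)
        w -= lr * grad_w  # hand-written gradient descent step, no optimizer package
        b -= lr * grad_b
    return w, b


# Toy, linearly separable data (not the course dataset).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
true_w = np.array([1.5, -2.0, 0.5, 0.0, 1.0])
y = (X @ true_w > 0).astype(np.float64)

w, b = train(X, y)
accuracy = ((forward(X, w, b) > 0.5) == (y > 0.5)).mean()
print(f"training accuracy on toy data: {accuracy:.3f}")
```

The same forward/backward split carries over to a multi-layer network, where each layer caches its inputs in the forward pass and returns gradients for its parameters and inputs in the backward pass.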

Grading

The grading process is calculated as follows:

-

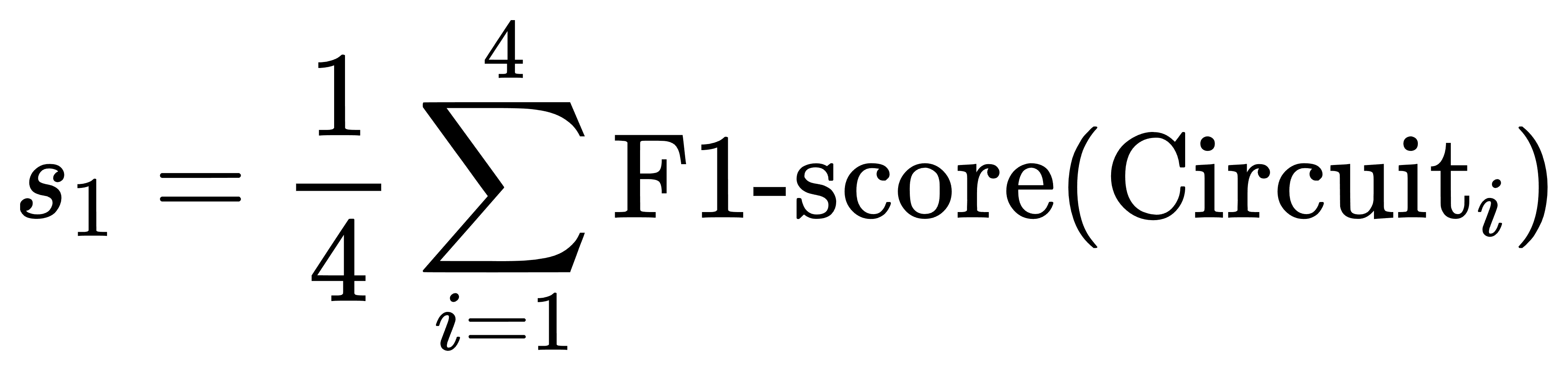

Generalization Evaluation on Open-Source Benchmarks:

Give the EPFL benchmark, you have to use a leave-one-domain-out cross-validation strategy, where one circuit is selected as the test circuit, and the remaining circuits are used for training. The four test circuits are Hyp, Log2, Multiplier, and Square. The first score s1 is calculated as the average F1-score across these four test circuits:

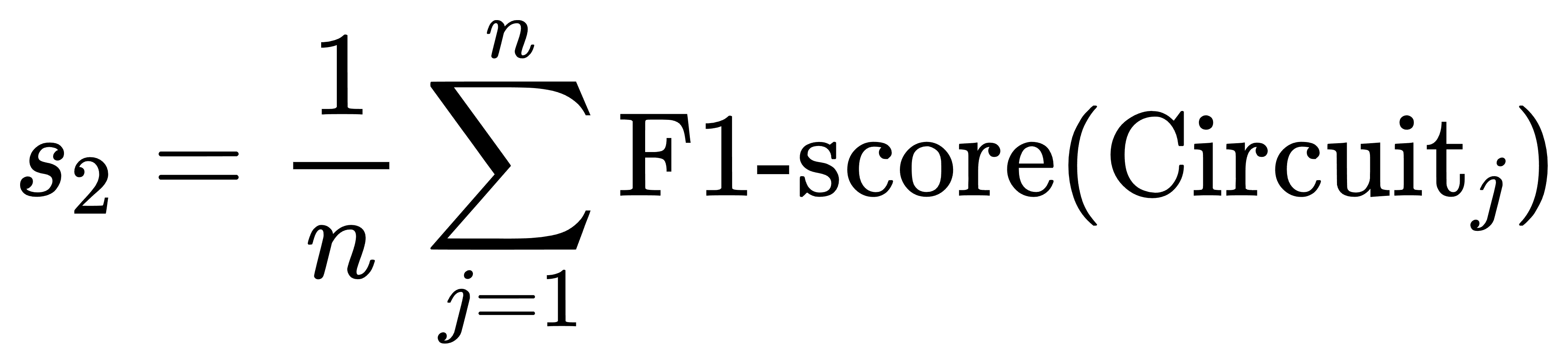

- Cross-Benchmark Evaluation:

Give the EPFL benchmark, you have to first train a model. Then, the TA will evaluate the performance of your model on several test circuits from another open-source benchmark. The second score s2 is computed as the average F1-score across these three test circuits:

-

Final Score Calculation:

The overall score s combines s1 and s2 with weights of 0.4 and 0.6, respectively, reflecting the relative importance of the two evaluations:
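The scoring procedure described in this section can be sketched as follows. This is an illustrative sketch rather than the TAs' evaluation script: it assumes label 1 is the positive class, returns 0.0 when F1 is undefined, and uses hypothetical helper names throughout.

```python
import numpy as np


def f1_score(y_true, y_pred):
    """Binary F1-score, treating label 1 as the positive class (an assumption)."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))
    denom = 2 * tp + fp + fn
    # Convention chosen here: F1 is 0.0 when there are no positives at all.
    return 2 * tp / denom if denom > 0 else 0.0


def leave_one_out_splits(circuit_names, test_circuits):
    """Yield (train_names, test_name), holding out each test circuit in turn."""
    for test_name in test_circuits:
        yield [c for c in circuit_names if c != test_name], test_name


def average_f1(per_circuit_f1):
    """Average of per-circuit F1-scores, as used for both s1 and s2."""
    return float(np.mean(per_circuit_f1))


def overall_score(s1, s2):
    """Weighted combination of the two evaluations (weights 0.4 and 0.6)."""
    return 0.4 * s1 + 0.6 * s2


print(f1_score([1, 1, 0, 0], [1, 0, 1, 0]))  # tp=1, fp=1, fn=1 -> 0.5

# "OtherCircuit" is a placeholder for the remaining EPFL circuits.
circuits = ["Hyp", "Log2", "Multiplier", "Square", "OtherCircuit"]
for train_names, test_name in leave_one_out_splits(circuits, circuits[:4]):
    print(test_name, "held out; training on", train_names)
```

In an actual run, a fresh model would be trained on each training split, its F1-score on the held-out circuit recorded, and the four values averaged to obtain s1.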

System Requirements

We will evaluate your model on a GeForce RTX 2080 (about 24G memory) running Ubuntu 18.04. Please limit the size of your model to avoid out-of-memory (OOM) errors.

Due Day

- Team leaders should inform the TAs of their team members before 23:59, December 8, 2024.
- Please submit your report, code, and trained model before 23:59, January 19, 2025.
