Basic Information

- Instructor: Jie Wang

- Email: jiewangx@ustc.edu.cn

- Time and Location: R 3:55 PM - 6:20 PM (3A111)

- TAs:

Lectures

All course materials will be shared via the Dingding group.

| Index | Date | Topic | Lecture Notes | Homework |

|---|---|---|---|---|

| 00 | Feb 20, 2020 | Introduction | Lec00.pdf | |

| 01 | Feb 27, 2020 | Linear Regression | Lec01.pdf, Lec01slides.pdf | HW1.pdf |

| 02 | Mar 05, 2020 | Convex Sets & Convex Functions | Lec02.pdf | HW2.pdf |

| 03 | Mar 12, 2020 | Gradient Descent | Lec03.pdf | HW3.pdf |

| 04 | Mar 19, 2020 | Naive Bayes + Guest Lecture on Knowledge Graph |

Lec04.pdf | |

| 05 | Mar 26, 2020 | Logistic Regression + Stochastic Gradient Descent |

Lec05.pdf | HW4.pdf |

| 06 | Apr 02, 2020 | SVM + Lagrangian Duality I | Lec06.pdf | |

| 07 | Apr 09, 2020 | SVM + Lagrangian Duality II | Lec07.pdf | HW5.pdf |

| 08 | Apr 16, 2020 | Decision Tree + Neural Networks | Lec08.pdf, Lec09.pdf, Lec10.pdf | |

| 09 | Apr 23, 2020 | Elementary Learning Theory | Lec11.pdf | HW6.pdf |

| 10 | Apr 30, 2020 | Elementary Reinforcement Learning I + Talks on Applications of RL |

Lec12.pdf | |

| 11 | May 07, 2020 | Elementary Reinforcement Learning II | Lec13.pdf | HW7.pdf |

| 12 | May 14, 2020 | Principal Component Analysis | Lec14.pdf | HW8.pdf |

| 13 | May 21, 2020 | Discussion on Homeworks |

Project

Description

In this project, you are expected to implement a Reinfocement Learning algorithm to teach an agent to play an Atari game, Lunar Lander or River Raid.

Environment

Lunar Lander and River Raid has been implemented as the Reinforcement Learning environment in OpenAI Gym.

You may want to install Gym by running pip install gym[atari,box-2d].

Moreover, You can get more information about these two environments from

Riverraid-v0 and

LunarLander-v2.

You may want to use

import gym

env = gym.make('LunarLander-v2')

to create the Lunar Lander environment and

import gym

env = gym.make('Riverraid-v0')

to create the River Raid environment.

to create the River Raid environment.

Agent

We provide you with a simple code to evaluate the agent which takes a random action at each step.

You may obtain the code from here.

To run the code, go into the ml_spring_2020 directory and execute python scripts/main.py.

The default environment is River Raid. You may modify the environment in configs/main_setting.py.

We define a base class called RL_alg in src/alg/RL_alg.py.

You are supposed to implement your algorithm in src/alg/[your student ID] directory inheriting from RL_alg.

We give an example in src/alg/PB00000000.

You are supposed to finally upload src/alg/[your student ID] directory.

TAs will use the provided code to evaluate your trained agent.

Requirements

- Train an agent to play Lunar Lander or River Raid. If you choose Lunar Lander, then the final score of this project is less than 18 pts.

- Do NOT use any autograd tools or any optimization tools in machine learning packages. You are supposed to implement your Reinforcement Learning algorithm from scratch. For example, if you want to use the neural network, you are expected to implement both the neural network and the backpropagation. You can use the libraries in the WhiteList. TAs will update the Whitelist if you have reasonable advices.

- Remember to save the trained agent. You are supposed to upload your trained agent onto the Blackboard system. TAs will use the provided code to evaluate your trained agent.

- Please submit a detailed technical report. The technical report should include a learning curve plot showing the performance of your algorithm. The x-axis should correspond to number of time steps and the y-axis should show the mean 100-episode reward as well as the best mean reward. Besides, the technical report should include all the details of your projects, like the implementations, the experimental settings, the analysis of your results, etc.

- You can find partners to work as a team with no more than three members in total. Please list the percentage of each member’s contribution in your report, e.g., {San Zhang:30%, Si Li:35%, Wu Wang:35%}, which is related to your final grade.

Grading

- If you choose Lunar Lander, full points = min(Base(18pts) + Bonus(5pts), 18pts)

- If you choose River Raid, full points = min(Base(20pts) + Bonus(5pts), 20pts).

- The base is determined by the score evaluated by the provided code.

- The bonus consists of two parts. The first part is the novelty of your approach, which should be highlighted in your poster and technical report. The second part is related to the readability of your code and technical report. Please make them easy to read.

Hint

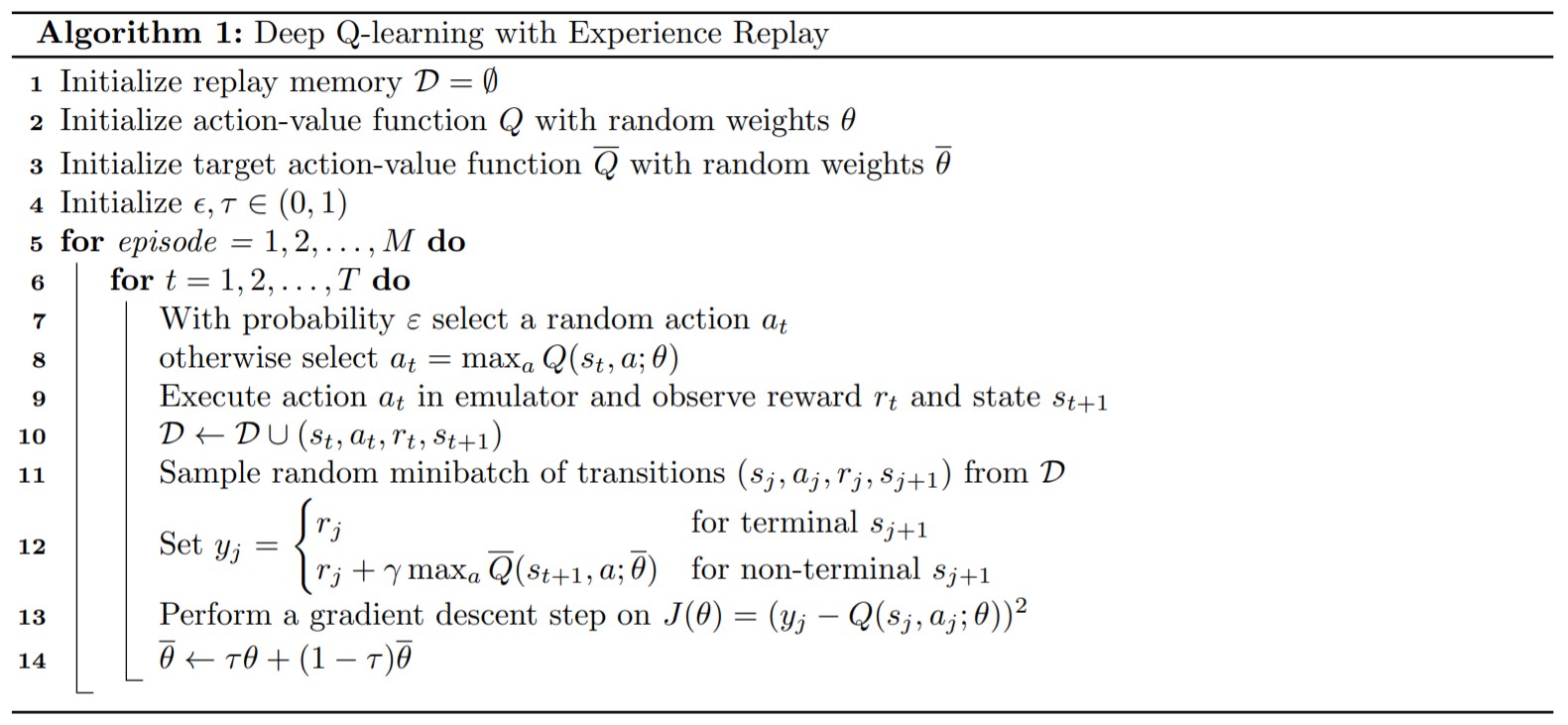

We recommend implementing Deep Q-learning in this project. The pseudocode bellow is a modified version which is easy to reproduce.

We recommend first trying out Lunar Lander to check the correctness of your code. Then, you try training your agent to play River Raid.

Due Day

- Please turn in your technical report and your trained agent before 23:59 PM, June 18, 2020.

- No late submissions will be accepted.

Page view (from Jan 1, 2021):

Downloads (from Jan 1, 2021):414